Posts by Archana

Knowledge Distillation in Neural Networks

Knowledge Distillation

In updates, By archana, Dec 31, 2021

We got funded!

Every open source project dreams of spreading its wings and becoming successful. But we know these projects need effort, time and, above all, funding to help support the d...

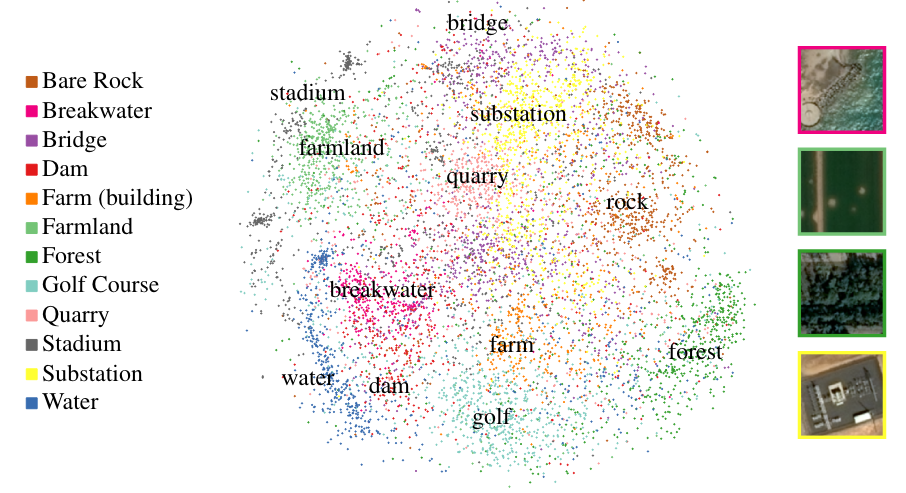

In updates, By archana, Dec 23, 2021

Contrastive Session Fusion and its Applications in TinyML

Oftentimes the data your model is trained on will differ from the data it receives when deployed. For instance, in an image classification task, your training data may have b...

In paper review, By archana, Sep 01, 2021