Learning TinyML is Hard. Here is what ScaleDown is trying to do about it

A few days ago, we announced that our project received the No Starch Press Foundation grant. Their decision has given us the motivati...

We got funded!

Every open source project dreams of taking wing and becoming successful. But we know these projects need effort, ti...

Introducing ScaleDown

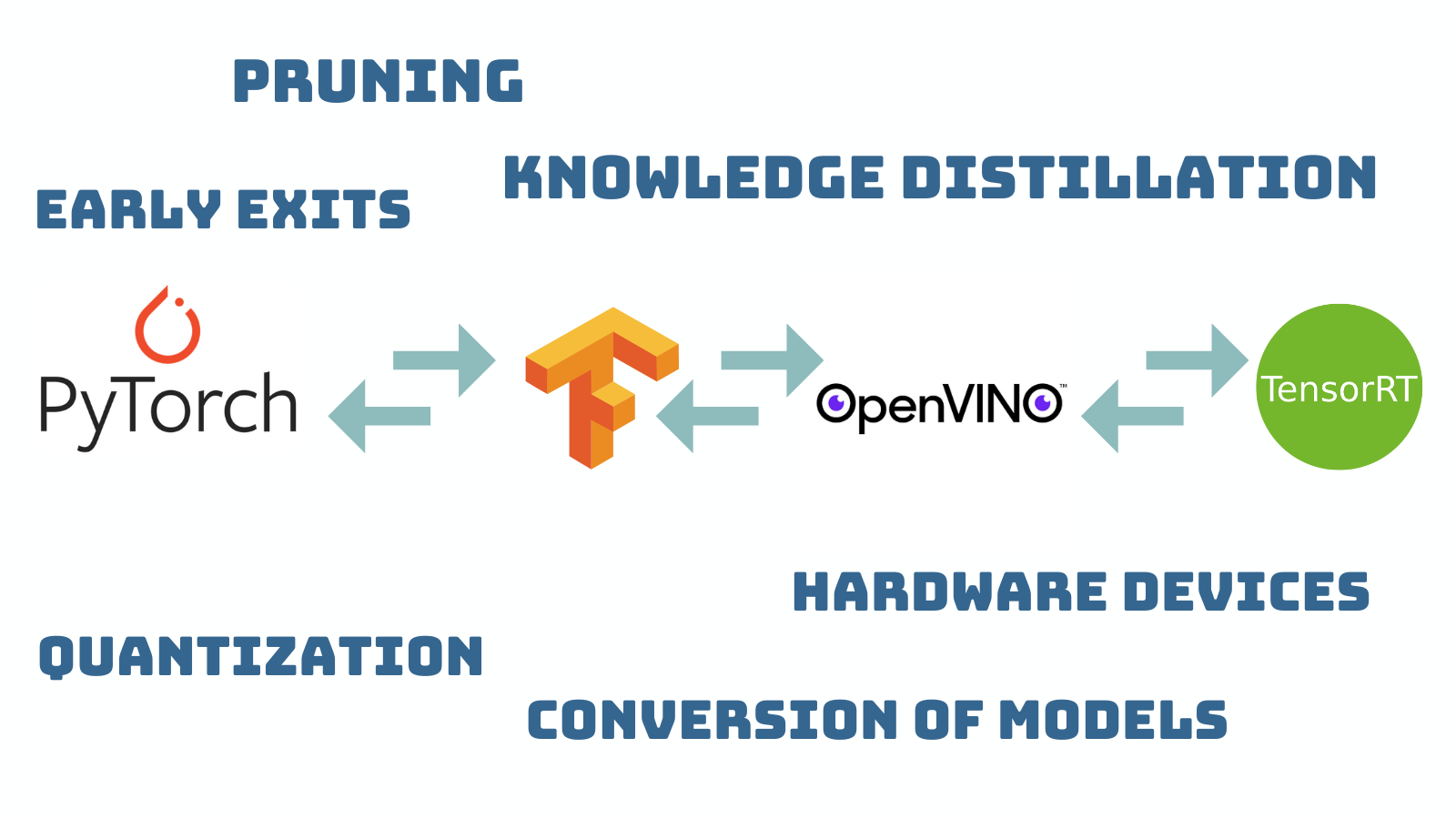

Over the last decade, the popularity of smart connected devices has led to an exponential growth of Internet of Things (IoT) devices....

All Stories

Learning TinyML is Hard. Here is what ScaleDown is trying to do about it

A few days ago, we announced that our project received the No Starch Press Foundation grant. Their decision has given us the motivation to continue working on this project and the con...

In education, By soham, Jan 01, 2022

Knowledge Distillation in Neural Networks

Knowledge Distillation

In updates, By archana, Dec 31, 2021

We got funded!

Every open source project dreams of taking wing and becoming successful. But we know these projects need effort, time and, above all, the funding to help support the d...

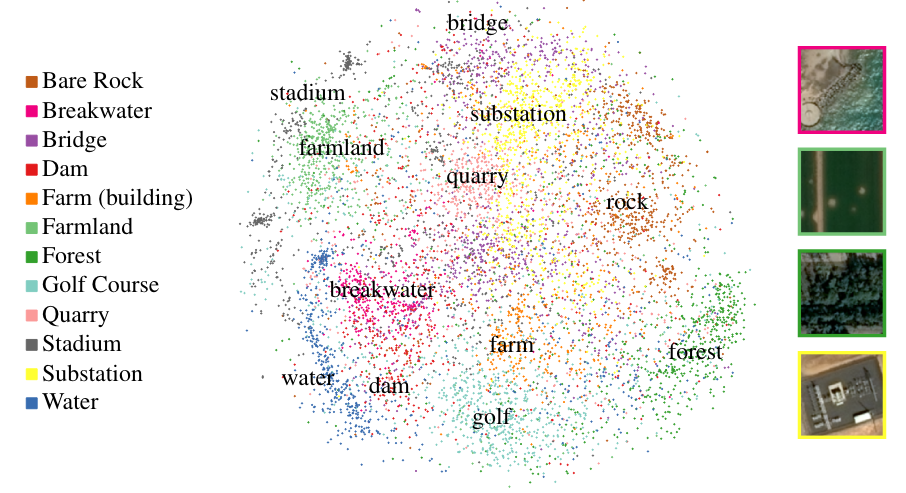

In updates, By archana, Dec 23, 2021

Contrastive Sesion Fusion and its Applications in TinyML

Often, the data your model is trained on will differ from the data it receives when deployed. For instance, in an image classification task, your training data may have b...

In paper review, By archana, Sep 01, 2021

Some More Tricks to Shrink CNNs for TinyML

Pete Warden had written a post about

In Computer Vision, By soham, Aug 18, 2021

Introducing ScaleDown

Over the last decade, the popularity of smart connected devices has led to an exponential growth of Internet of Things (IoT) devices. Around the same time, the availability of a vast ...

In updates, By soham, Jul 14, 2021

Featured

- Learning TinyML is Hard. Here is what ScaleDown is trying to do about it
  In education, By soham
- Quantization in Neural Networks
  In updates, By archana
- Knowledge Distillation in Neural Networks
  In updates, By archana
- We got funded!
  In updates, By archana
- Contrastive Sesion Fusion and its Applications in TinyML
  In paper review, By archana
- Some More Tricks to Shrink CNNs for TinyML
  In Computer Vision, By soham